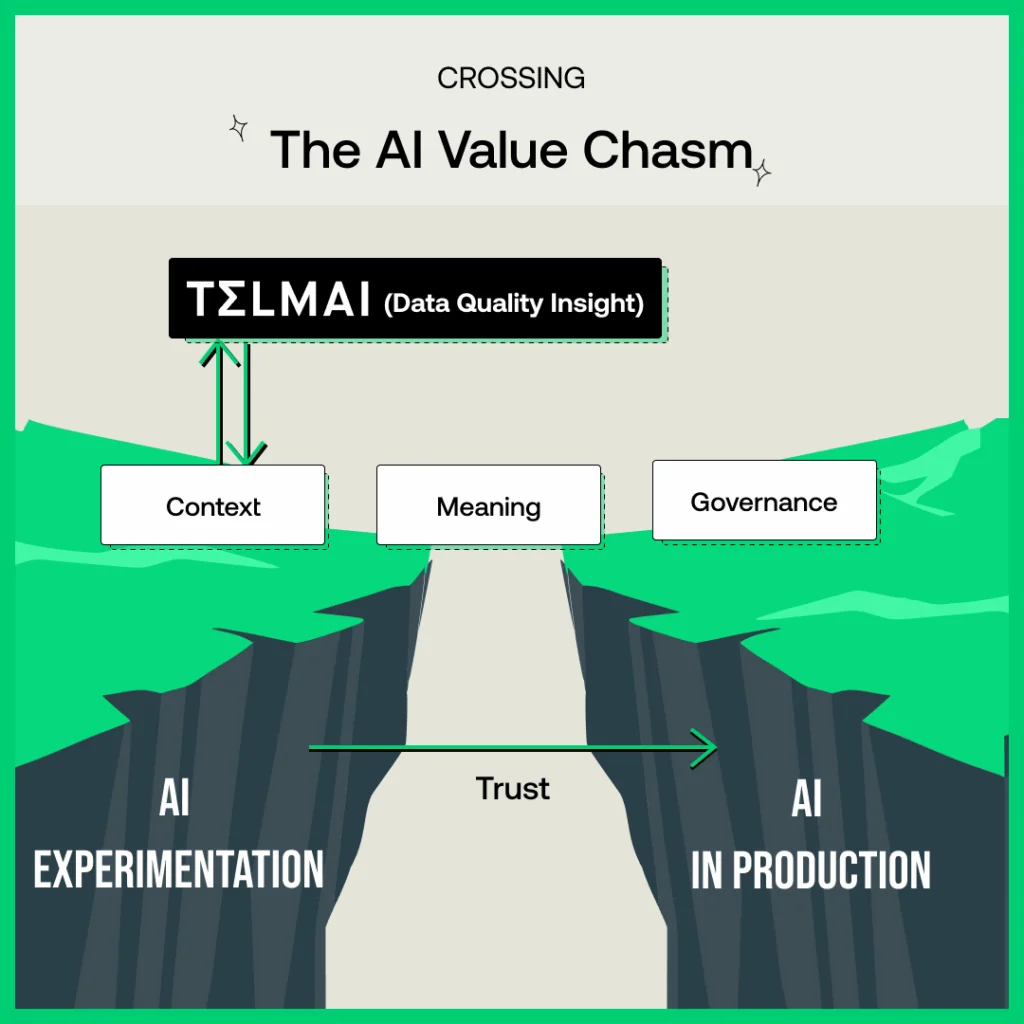

AI pilots fail not because models don’t work, but because data systems lack reliability and context. To scale AI responsibly, enterprises need validated data at the source and metadata enriched with health and governance signals. This article shows how Telmai and Atlan close this gap. Telmai validates data as it lands, while Atlan’s Metadata Lakehouse adds lineage and governance to create the trusted foundation for scaling AI.

With a surge in AI investment, enterprise leaders are under mounting pressure to deliver reliable, scalable AI solutions that create measurable business impact. A recent MIT study found that 95 percent of generative AI projects failed to produce measurable outcomes, as many organizations struggle to move beyond experimentation and into reliable execution. AI pilots are failing to deliver, not because the models don’t work, but because the underlying foundational data systems upon which they are built lack reliability and context.

Autonomous systems and AI agents act on data in microseconds, so there’s no time for late-stage downstream fixes, which is where most companies focus their data quality efforts today. To power AI-native ecosystems at scale, organizations must build trust at the source as data is ingested and ensure that data quality metadata is pushed to data catalogs and metadata systems, allowing agents to evaluate fitness before consumption. This creates the trusted foundation that autonomous AI products need to operate reliably and at scale.

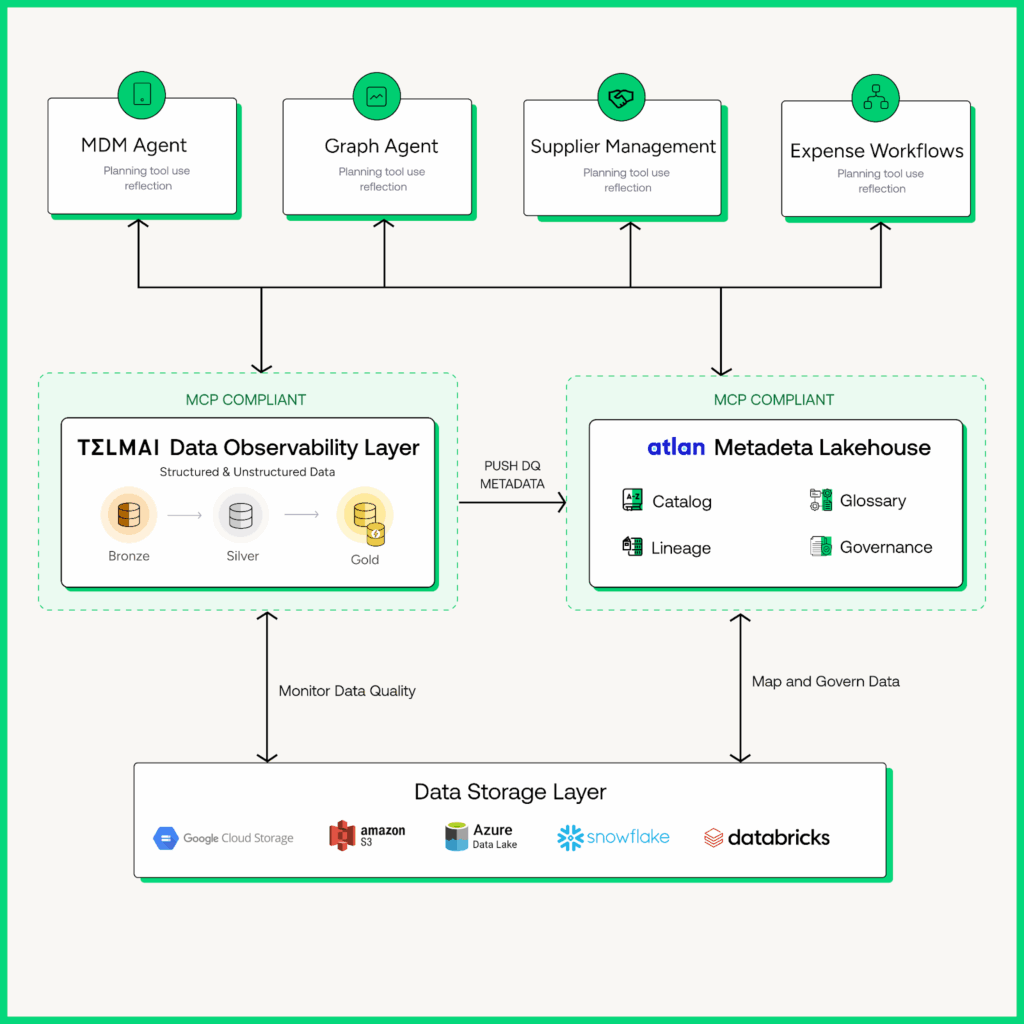

By combining Telmai’s AI-first data quality platform with Atlan’s AI-native metadata and governance platform through Atlan’s App Framework, enterprises gain a seamless way to detect, resolve, and govern data issues directly within the tools their teams already use.

In this article, let’s dive deeper into how they together create a single fabric of trust + context that allows enterprises to move beyond pilots and scale AI responsibly.

Why is real-time validation at ingestion critical for reliable AI?

The failure point for most AI initiatives isn’t the model; it’s the underlying data pipeline feeding it. Business Intelligence (BI) is inherently deterministic and descriptive, working with structured historical data to explain what happened through predefined reports and dashboards. AI, in contrast, is non-deterministic and predictive. It consumes both structured and unstructured data to learn patterns, forecast outcomes, and make autonomous decisions.

The old adage “garbage in, garbage out” takes on far higher stakes here. AI and LLMs always identify patterns from the inputs they receive and derive insights without context or judgment. If those inputs are incomplete, drifting, or biased, the model confidently reproduces those flaws at scale.

Traditional data quality approaches were designed for a reporting world, where errors could be corrected after a dashboard broke or a KPI looked suspicious. AI-native workloads break this model entirely. AI and autonomous systems operate at machine speed, where thousands of micro-decisions are made every second. Waiting until the BI or monitoring layer to enforce quality is simply too late, as the damage has already propagated through to downstream business-critical applications.

That’s why ingestion-layer validation has become non-negotiable. Data quality must be ensured before agentic workflows can access or read the data, not after it lands in the access layer. That means validating at the data lake itself, or even earlier, as data enters the lake through event streams. Reliability must be enforced as data is ingested into the lake, especially in open formats like Apache Iceberg and Delta Lake, where it is profiled and validated before being published to production tables.

This is exactly where Telmai comes in. Purpose-built for AI-first architectures, Telmai continuously monitors and validates data through your data pipeline, irrespective of volume or velocity. Telmai’s ML-driven and rule-based checks automatically detect anomalies, schema changes, and data drift before they impact production. Further, Telmai can publish data health KPIs into data catalogs and metadata systems like Atlan, enriching lineage and governance with real-time data quality context.
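
The validate-before-publish pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Telmai’s implementation: the function names, the thresholds, and the in-memory lists standing in for production and quarantine tables are all hypothetical. A real pipeline would run comparable checks against staged data in a table format such as Apache Iceberg or Delta Lake.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds; sensible values depend on the dataset.
MAX_NULL_RATE = 0.05
MAX_VOLUME_DRIFT = 0.5


@dataclass
class ValidationReport:
    passed: bool
    issues: list = field(default_factory=list)


def validate_batch(rows, expected_columns, baseline_row_count):
    """Run simple rule-based checks on a staged batch of dict rows."""
    issues = []

    # Schema check: every row must carry exactly the expected columns.
    for row in rows:
        if set(row) != set(expected_columns):
            issues.append("schema_change")
            break

    # Completeness check: null rate per expected column.
    for col in expected_columns:
        nulls = sum(1 for row in rows if row.get(col) is None)
        if rows and nulls / len(rows) > MAX_NULL_RATE:
            issues.append(f"null_rate:{col}")

    # Volume check: row count relative to a historical baseline.
    if baseline_row_count > 0:
        drift = abs(len(rows) - baseline_row_count) / baseline_row_count
        if drift > MAX_VOLUME_DRIFT:
            issues.append("volume_drift")

    return ValidationReport(passed=not issues, issues=issues)


def ingest(rows, expected_columns, baseline_row_count, production, quarantine):
    """Publish a batch only if it validates; otherwise quarantine it."""
    report = validate_batch(rows, expected_columns, baseline_row_count)
    (production if report.passed else quarantine).extend(rows)
    return report
```

The point of the design is that consumers only ever read from `production`, so a failed batch never becomes visible to them. The returned report is the kind of health signal that could then be published to a catalog.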

“Agentic AI systems don’t have the luxury of waiting for late-stage fixes. They act in microseconds. That means trust must be built into data at ingestion, and that trust must travel with context across the enterprise,” said Mona Rakibe, Co-Founder and CEO of Telmai. “With the App Framework, Telmai and Atlan will give teams a trusted data layer ready to power applications and integrations that let AI move beyond pilots and deliver at scale.”

How Atlan extends this trust with context

Trust in data is only half the story. For enterprises to scale AI responsibly, trust must travel with context so every consumer, whether human, system, or AI agent, knows what the data means, where it came from, and how it can be used.

As part of Atlan’s new App Framework, Telmai is now integrated directly into Atlan’s Metadata Lakehouse. For the first time, enterprises can unify monitoring within the same foundation that powers column-level lineage, business-ready data products, AI governance, and policy & compliance monitoring.

With this integration, customers using Telmai and Atlan can:

- Unify trust and context in the Metadata Lakehouse – Telmai’s data quality signals, such as freshness, anomaly detection, schema changes, and volume drift, are automatically surfaced inside Atlan, enhancing lineage and metadata with actionable insights that empower data consumers and AI agents alike.

- Enable true interoperability for agentic AI – For agentic systems to truly scale, interoperability is critical. The tools and services that agents depend on, whether for validation, access, enrichment, or downstream action, must be accessible through a common layer. Atlan delivers this through its open Metadata Lakehouse by providing consistent, versioned context across raw ingestion data and curated data products, ensuring data fitness can be evaluated at every step.

- Enforce policy and compliance at scale – With Telmai’s data quality metadata embedded in the context layer, data trust signals can flow downstream via column-level lineage and bidirectional tag management to other platforms like Databricks, Snowflake, or data access systems. When data quality issues are encountered, they can trigger automated governance workflows, ensuring policy compliance and reducing risk across autonomous AI pipelines.
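
To make the idea of trust signals flowing downstream through lineage more concrete, here is a small sketch. It assumes a hypothetical lineage graph held in a plain dictionary and uses made-up asset names and tags. It does not use Atlan’s or Telmai’s APIs; it only illustrates the general technique of walking a lineage graph to tag every asset affected by an upstream issue.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to its direct downstream assets.
LINEAGE = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["mart.revenue", "mart.customer_ltv"],
    "mart.revenue": ["dashboard.finance"],
    "mart.customer_ltv": [],
    "dashboard.finance": [],
}


def propagate_issue(lineage, source, tag):
    """Breadth-first walk that tags the source and everything downstream of it."""
    tags = {source: tag}
    queue = deque([source])
    while queue:
        asset = queue.popleft()
        for child in lineage.get(asset, []):
            if child not in tags:  # skip assets already reached via another path
                tags[child] = tag
                queue.append(child)
    return tags


def governance_actions(tags):
    """Map each tagged asset to an illustrative workflow step."""
    return [f"notify owner of {asset}: {tag}" for asset, tag in sorted(tags.items())]
```

Calling `propagate_issue(LINEAGE, "staging.orders", "dq:volume_drift")` tags the staging table and the three assets that depend on it, while leaving `raw.orders` untouched because it sits upstream of the issue.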

“Enterprises can’t scale AI responsibly without a foundation of trust and context. Telmai brings real-time, ingestion-level validation, and Atlan serves as the context layer, ensuring that trust travels with context across every system, workflow, and AI agent,” said Marc Seifer, Head of Global Alliances at Atlan. “Together, Telmai and Atlan are enabling organizations to move beyond pilots and build AI systems that operate reliably, responsibly, and at scale.”

Get AI-Ready—Now

For enterprises to successfully transition AI pilots into production, they need real-time, low-latency access to validated data, along with metadata that carries context about its health, lineage, and governance. Without this foundation, AI agents operate blindly, lacking visibility into whether the data they consume is fit for use, where it originated, or whether they are authorized to access it.
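
As a rough illustration of what evaluating fitness before consumption might look like from an agent’s side, the sketch below checks the three questions raised above: is the data healthy, is its origin known, and is the agent allowed to use it? The `AssetMetadata` fields, the role names, and the one-hour freshness window are all assumptions made for the example, not fields of any real catalog schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AssetMetadata:
    # All fields are hypothetical stand-ins for catalog metadata.
    name: str
    last_validated: datetime
    open_issues: int
    upstream_sources: tuple
    allowed_roles: frozenset


def fitness_check(meta, agent_role, now, max_age=timedelta(hours=1)):
    """Return (ok, reasons) for whether an agent should consume the asset."""
    reasons = []
    if now - meta.last_validated > max_age:
        reasons.append("stale validation")
    if meta.open_issues > 0:
        reasons.append("open data quality issues")
    if not meta.upstream_sources:
        reasons.append("unknown lineage")
    if agent_role not in meta.allowed_roles:
        reasons.append("role not authorized")
    return (not reasons, reasons)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    meta = AssetMetadata(
        name="mart.revenue",
        last_validated=now - timedelta(minutes=5),
        open_issues=0,
        upstream_sources=("staging.orders",),
        allowed_roles=frozenset({"pricing_agent"}),
    )
    print(fitness_check(meta, "pricing_agent", now))
```

Returning the list of reasons alongside the boolean lets the agent, or a human reviewing its behavior, see why a dataset was rejected rather than only that it was.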

Telmai and Atlan close this gap. Telmai continuously monitors and validates data in open table formats, such as Apache Iceberg and more, as it lands in the lake layer, detecting anomalies and data quality issues before they propagate downstream. It then generates rich observability metadata, which flows into Atlan’s Metadata Lakehouse. There, these signals are combined with lineage, policies, and business glossaries, providing a complete picture of data health and context for both humans and AI agents.

By bringing Telmai’s data quality signals into Atlan’s Metadata Lakehouse, enterprises can now drive measurable impact from their AI implementations, with a reliability and context fabric that enables AI to scale responsibly.

Want to learn how Telmai and Atlan can work together to scale your existing data infrastructure to be AI-ready? Click here to connect with our team for a personalized demo.
